On a given day, I work primarily on Laravel apps, some using Livewire and some using Inertia.js with a Vue.js frontend, plus a smattering of WordPress sites and custom plugins.

I’ve tried PhpStorm and didn’t care for it, so, like what feels like 90% of the industry, I use VS Code as my primary editor.

Here’s a list of the extensions I use on a daily basis:

General

Sublime Text Keymap and Settings Importer: I used Sublime Text for a year or so and built up muscle memory for its keyboard shortcuts, so they feel far more intuitive to me than the standard VS Code ones.

Markdown Preview Mermaid Support: I like to document solution architecture using Mermaid diagrams, and this is a great extension for previewing them in VS Code.

Path Intellisense provides autocompletion when typing relative file paths in a project.

EditorConfig for VS Code applies per-project editor settings (indentation, line endings, and so on) from the .editorconfig file stored in each project.

Prettier – Code formatter is useful for automatically formatting code files.

TODO Highlight v2 provides visual feedback for TODO/FIXME/etc. comments in code.

Encode Decode is extremely useful when dealing with encoded strings. I use it fairly frequently to decode base64-encoded strings.
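
For reference, base64 decoding is easy to reproduce in code when the editor isn't at hand. The snippet below is a minimal sketch in JavaScript (Node.js), not how the extension itself works; the function names `decodeBase64` and `encodeBase64` are made up for illustration, and it assumes the encoded payload is UTF-8 text.

```javascript
// Hypothetical helpers for illustration; not part of the Encode Decode extension.

// Decode a base64-encoded string into UTF-8 text using Node's built-in Buffer.
function decodeBase64(encoded) {
  return Buffer.from(encoded, "base64").toString("utf8");
}

// Encode UTF-8 text as base64 (the inverse operation).
function encodeBase64(text) {
  return Buffer.from(text, "utf8").toString("base64");
}

console.log(decodeBase64("aGVsbG8gd29ybGQ=")); // prints "hello world"
```

The extension does the same kind of conversion in place on the selected text, which is why it is quicker than switching to a terminal for a one-off string.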

Remote – SSH / Remote – SSH: Editing Configuration Files / Remote Explorer make it really easy to “cowboy code” on a server 😬 and are useful for occasional debugging in production.

Live Share is amazing for pair-programming: it allows you to open the same codebase your colleague is working on and work with it on your machine just as if it were a local project.

PHP

Composer shows you the installed version of each package in your composer.json file and gives you a quick link to the packagist.org page for each.

PHP DocBlocker reduces some of the boilerplate necessary when writing docblocks.

PHP Intelephense is, in my opinion, significantly better than the built-in PHP language support: it provides autocompletion for functions, methods, variables, etc., along with project-wide parameter hints and even some static analysis features.

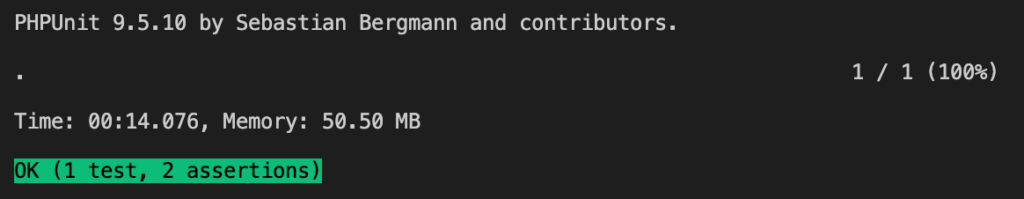

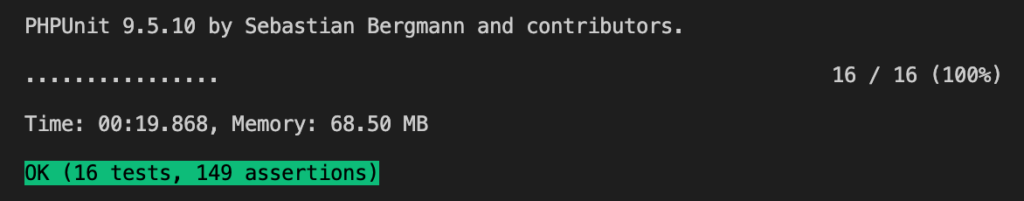

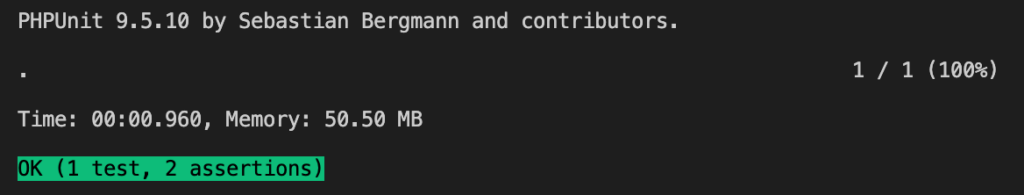

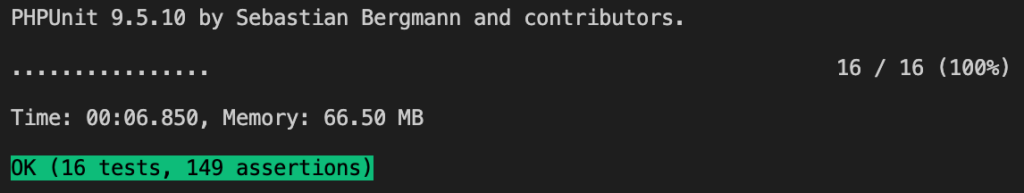

PHPUnit Test Explorer is a very useful wrapper for phpunit; you can run a single test, a single file, or the entire test suite, and, when combined with Test Explorer UI and Test Adapter Converter, it shows a list of passed/failed tests in the sidebar. I use it frequently to run my entire test suite and get a quick list of which test(s) failed.

phpstan runs static analysis on files as I save them, showing me errors as I write code.

PHP Debug might just be the extension I interact with the most; it lets me set breakpoints, step through, and inspect code as it runs. I can’t imagine trying to program without it.

Laravel

DotEnv provides syntax highlighting for .env files used for Laravel configuration.

Laravel Extra Intellisense saves me a lot of time by autocompleting route names and parameters, configuration keys, views and variables, validation rules, and more.

Laravel Blade Spacer automatically adds spaces when you add a new curly brace pair: just a minor code style convenience.

Livewire Language Support provides autocompletion and other features for Livewire projects.

Laravel goto view provides one-click access to views from controllers.

Laravel Blade Snippets provides Blade snippets and syntax highlighting.

Laravel Pint provides automatic code formatting using Pint.

Laravel Blade formatter provides formatting tools for Blade templates.

JavaScript

Alpine.js IntelliSense provides IntelliSense and snippets for Alpine.js.

Inertia.js provides support for linking to Vue templates and autocompletes component names.

Other Languages

GraphQL: Syntax Highlighting provides language support for GraphQL files.

SCSS IntelliSense autocompletes mixins, functions, etc. in Sass files.

YAML provides YAML language support.

SQL Beautify provides formatting support for SQL files. I don’t always love the output, but it’s better than nothing.

Vue Language Features (Volar) provides language support, autocompletion, and other features for the Vue framework.

Markdown All in One provides keyboard shortcuts, formatting helpers, preview, and more for Markdown files.

Tailwind CSS IntelliSense provides suggestions, highlights duplicates, and more for Tailwind class names.

Git

GitLens — Git supercharged provides some great features; my favorite is the code history on the active or hovered line.

GitLab Workflow is a wonderful integration with GitLab; I use the “copy active link to clipboard” feature daily to copy a permalink for specific line(s) when discussing code with my colleagues. It also provides helpful CI features including autocompletion and hints when editing .gitlab-ci.yml files, as well as showing pipeline/job status right in the VS Code status bar.